SEO Health Checks - Regular Housekeeping Tasks for Your Website's SEO

The author's views are entirely their own (excluding the unlikely event of hypnosis) and may not always reflect the views of Moz.

Technical problems, errors and surprise releases are all regular features of day-to-day website management when you’re an SEO. There’s no doubt that maintaining a fast, error-free and well-optimised site can lead to long-term traffic success. Here are some of my tips for regular checks you should carry out to stay on top of your website and maximise your search engine performance.

General Error Checking

General errors crop up continually on any website and, left unchecked, their volume can spiral out of control. Resolving large numbers of 404 and timeout errors on your site can help search engines reduce the bandwidth they spend crawling your whole site. It’s arguable that minimising crawl errors and general accessibility issues can help get new and updated content into search engine indexes more quickly and more often: a good thing for SEO!

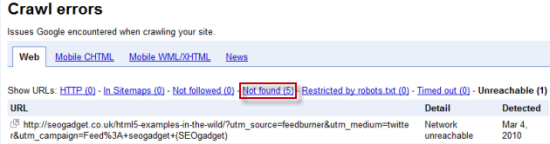

If you want to get smart with error handling and other crawl issues, start by getting a Google Webmaster Tools account. Take a look at “Crawl errors” found via the “diagnostics” panel after you’ve verified your site:

Paying particular attention to the “Not found” and “Timed out” reports, it’s wise to test each error with an online HTTP header checker or a Firefox add-on such as Live HTTP Headers or HttpFox. I find that when you drill down into the first 100 or so errors, a common pattern tends to emerge, so only a few fixes are needed. I like to fix 404 pages that have external links pointing to them first, to recover the maximum SEO value from legacy links.
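To spot those patterns quickly, a short script can bucket error URLs by a rough pattern. This is a minimal Python sketch, assuming you have already copied or exported the error URLs into a plain list; the bucketing rule (first path segment, digit runs masked as `N`) is an illustrative heuristic, not something Webmaster Tools provides, and `url_pattern` and `top_error_patterns` are hypothetical names.

```python
import re
from collections import Counter
from urllib.parse import urlsplit


def url_pattern(url: str, depth: int = 1) -> str:
    """Reduce a URL to a rough pattern: mask digit runs, keep the first `depth` path segments."""
    segments = [s for s in urlsplit(url).path.split("/") if s]
    masked = [re.sub(r"\d+", "N", s) for s in segments]
    pattern = "/" + "/".join(masked[:depth])
    # Mark deeper URLs with a wildcard so /products/1 and /products/2 share a bucket
    return pattern + ("/*" if len(masked) > depth else "")


def top_error_patterns(urls: list[str], limit: int = 100, depth: int = 1) -> list[tuple[str, int]]:
    """Count patterns among the first `limit` error URLs, most common first."""
    return Counter(url_pattern(u, depth) for u in urls[:limit]).most_common()


if __name__ == "__main__":
    # Hypothetical sample of "Not found" URLs
    errors = [
        "http://www.example.com/products/123",
        "http://www.example.com/products/456",
        "http://www.example.com/about-us",
    ]
    for pattern, count in top_error_patterns(errors):
        print(f"{count:>4}  {pattern}")
```

If everything lands in a single bucket such as `/products/*`, raising `depth` to 2 splits it further.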

It’s important to note that there’s sometimes more to an error report than just the URL listed in the console. I’ve found issues such as multiple redirects ending in a 404 error, which is important information to include when briefing your developers and could save them a lot of diagnostic time.
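One way to capture the whole chain for that brief is to request each hop yourself without following redirects. The Python sketch below does this with the standard library's `http.client`; `head_request` and `trace_redirects` are hypothetical helper names, and it assumes the server answers `HEAD` requests (some return 405, in which case you'd swap in `GET`).

```python
from __future__ import annotations

import http.client
from typing import Callable, Optional
from urllib.parse import urljoin, urlsplit

REDIRECT_CODES = {301, 302, 303, 307, 308}


def head_request(url: str, timeout: float = 10.0) -> tuple[int, Optional[str]]:
    """Send one HEAD request without following redirects; return (status, Location)."""
    parts = urlsplit(url)
    conn_cls = http.client.HTTPSConnection if parts.scheme == "https" else http.client.HTTPConnection
    conn = conn_cls(parts.netloc, timeout=timeout)
    try:
        target = parts.path or "/"
        if parts.query:
            target += "?" + parts.query
        conn.request("HEAD", target)
        resp = conn.getresponse()
        return resp.status, resp.getheader("Location")
    finally:
        conn.close()


def trace_redirects(
    url: str,
    fetch: Callable[[str], tuple[int, Optional[str]]] = head_request,
    max_hops: int = 10,
) -> list[tuple[str, int]]:
    """Follow a redirect chain hop by hop, recording (url, status) for every hop."""
    chain = []
    for _ in range(max_hops):  # cap the hops so redirect loops can't run forever
        status, location = fetch(url)
        chain.append((url, status))
        if status not in REDIRECT_CODES or not location:
            break
        url = urljoin(url, location)  # Location headers may be relative
    return chain
```

Against a live site, `trace_redirects("http://www.example.com/old-page")` returns one `(url, status)` pair per hop, so a chain that ends in a `404` is obvious at a glance. The `fetch` parameter exists so the same logic can be exercised against canned responses.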

As a side note, be careful how you interpret the “Restricted by robots.txt” report. Sometimes those URLs aren’t directly blocked by robots.txt at all! If you’ve been scratching your head over the URLs in the report, run the HTTP header check. Often, a URL listed in this report is part of a redirect chain that contains, or ends at, a URL blocked by robots.txt.
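Once you have the list of URLs in a chain, you can test each hop against your robots.txt rules. Here is a minimal sketch using Python's `urllib.robotparser`, assuming every hop is on the same host (a cross-domain chain needs each host's own robots.txt) and that you pass the robots.txt contents in as a string; `blocked_hops` is a hypothetical name. The standard-library parser does simple prefix matching, so it may not interpret Google's `*` and `$` pattern extensions the way Googlebot does.

```python
from urllib.robotparser import RobotFileParser


def blocked_hops(chain_urls: list[str], robots_txt: str, user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs in a redirect chain that robots.txt disallows for `user_agent`."""
    parser = RobotFileParser()
    # parse() accepts the file's lines directly, so no network fetch is needed here
    parser.parse(robots_txt.splitlines())
    return [url for url in chain_urls if not parser.can_fetch(user_agent, url)]
```

A non-empty result means the “Restricted by robots.txt” entry comes from a hop in the chain rather than from the listed URL itself.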

For extra insight, try the IIS SEO Toolkit or run a classic Xenu’s Link Sleuth crawl; both can reveal a number of additional problems. Tom wrote a nice article on Xenu, and among his tips, setting the “Treat redirections as errors” option is one of my favourites. As well as fixing internal crawl errors, a site of any size should try to avoid internal links that point to redirects. From time to time, using Fetch as Googlebot in Webmaster Tools, or browsing your site with JavaScript and CSS disabled in the Web Developer Toolbar and your user agent set to Googlebot, can also reveal hidden problems.
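Finding internal links that point at redirects can also be scripted for a single page. The sketch below is an illustration rather than a crawler: it parses one page's HTML with Python's `html.parser`, keeps unique same-host links (fragments dropped), and asks a `status_of` callable for each link's status code. `status_of` is hypothetical; you'd supply one that makes a request without following redirects.

```python
from html.parser import HTMLParser
from typing import Callable
from urllib.parse import urldefrag, urljoin, urlsplit


class LinkCollector(HTMLParser):
    """Collect href values from <a> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def internal_links(html: str, page_url: str) -> list[str]:
    """Resolve every <a href> on the page and keep unique same-host URLs."""
    collector = LinkCollector()
    collector.feed(html)
    host = urlsplit(page_url).netloc
    links = []
    for href in collector.hrefs:
        absolute, _fragment = urldefrag(urljoin(page_url, href))
        if urlsplit(absolute).netloc == host and absolute not in links:
            links.append(absolute)
    return links


def redirecting_internal_links(html: str, page_url: str, status_of: Callable[[str], int]) -> list[str]:
    """Return internal links whose unfollowed response is a 3xx redirect."""
    return [url for url in internal_links(html, page_url) if 300 <= status_of(url) < 400]
```

Each URL returned is a link worth updating to point straight at its final destination.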

Linking Out to 404 Errors?

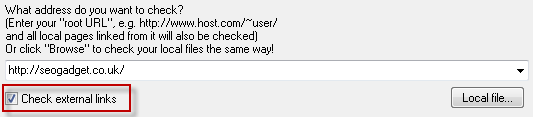

Linking out to expired external URLs isn’t great for user experience, and may imply that your site is getting out of date as a resource. Consider checking your outbound links for errors using the “Check external links” setting in Xenu.
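If you'd rather script this check than run Xenu, a rough Python sketch follows. It assumes you already have the list of outbound URLs; `final_status` and `broken_outbound_links` are hypothetical names, and using the `HEAD` method is an assumption (some servers reject it, so a `GET` fallback may be needed). Timeouts and DNS failures are reported alongside 4xx/5xx codes because both leave visitors at a dead end.

```python
import urllib.error
import urllib.request
from typing import Callable, Iterable


def final_status(url: str, timeout: float = 10.0) -> int:
    """HEAD the URL, letting urllib follow redirects, and return the final status code."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses arrive as exceptions; the status code is still useful
        return err.code


def broken_outbound_links(urls: Iterable[str], fetch: Callable[[str], int] = final_status) -> dict[str, str]:
    """Map each failing external URL to its status code or connection error."""
    broken = {}
    for url in urls:
        try:
            status = fetch(url)
        except OSError as exc:  # URLError, timeouts and DNS failures are all OSError subclasses
            broken[url] = f"error: {exc}"
            continue
        if status >= 400:
            broken[url] = str(status)
    return broken
```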

Canonicalisation

You spent time and effort specifying rules for canonicalised URLs across your site, but when did you last check that the rules you painstakingly devised are still in place? Websites evolve constantly, and things change. Redirect rules can be left out of updated site releases, putting your canonicalisation back at square one. As a best practice, you should always be working to reduce internal duplicate content rather than relying solely on the rel="canonical" attribute.

Checking the following can quickly reveal whether you have a problem:

- www or non-www redirects (choose either, but always use a 301)

- trailing slashes (leave them out like SEOmoz, or keep them in like SEOgadget, but don’t allow both)

- case redirects (a 301 redirect to all-lowercase URLs can solve a lot of headaches; alternatively, use title-case redirects if you want to capitalise place names, as some travel sites do)
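These three checks can be turned into a quick test per canonical URL. The Python sketch below assumes your canonical URLs are all lower case (title-case sites would need the case check adapted) and takes a `fetch` callable, hypothetical like the function names here, that makes one request without following redirects and returns `(status, Location header)`. Any variant that doesn't answer with a single 301 straight to the canonical URL is reported.

```python
from typing import Callable, Optional
from urllib.parse import urljoin, urlsplit, urlunsplit


def canonical_variants(canonical: str) -> list[str]:
    """Build the non-canonical variants that should all 301 to `canonical`."""
    scheme, host, path, query, _ = urlsplit(canonical)
    path = path or "/"
    # www <-> non-www
    alt_host = host[len("www."):] if host.startswith("www.") else "www." + host
    variants = [urlunsplit((scheme, alt_host, path, query, ""))]
    # Trailing slash toggled (not meaningful for the home page)
    if path != "/":
        toggled = path[:-1] if path.endswith("/") else path + "/"
        variants.append(urlunsplit((scheme, host, toggled, query, "")))
    # Upper-case path, assuming the canonical form is all lower case
    if path != path.upper():
        variants.append(urlunsplit((scheme, host, path.upper(), query, "")))
    return variants


def canonicalisation_problems(
    canonical: str, fetch: Callable[[str], tuple[int, Optional[str]]]
) -> list[tuple[str, int, Optional[str]]]:
    """Report variants that don't answer with a single 301 straight to the canonical URL."""
    problems = []
    for variant in canonical_variants(canonical):
        status, location = fetch(variant)
        target = urljoin(variant, location) if location else None
        if status != 301 or target != canonical:
            problems.append((variant, status, target))
    return problems
```

Running this against a handful of key templates after each release is a cheap way to catch redirect rules that went missing.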

“Spot Checks” of Front-End Code, Missing Page Titles and Duplicate Meta

Every now and again, it’s worth taking another look at your own code. Even if you don’t find a problem that needs fixing, you might find inspiration to make an enhancement, test a new approach or bring your site up to date with SEO best practice.

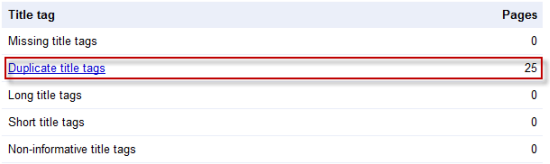

One quick check I find useful is under “Diagnostics” > “HTML suggestions” in Webmaster tools:

Duplicate title tags, duplicate meta descriptions, or both can reveal problems with your dynamic page templates, missed opportunities or canonicalisation issues.
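If you'd like to run the same check over your own crawl, the sketch below reports missing titles plus duplicated titles and meta descriptions. It assumes you already have a `{url: html}` mapping from a crawl and uses Python's standard `html.parser`; the `HeadTagParser` and `audit_head_tags` names are illustrative.

```python
from collections import defaultdict
from html.parser import HTMLParser


class HeadTagParser(HTMLParser):
    """Pull the <title> text and meta description out of one HTML page."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            attributes = dict(attrs)
            if (attributes.get("name") or "").lower() == "description":
                self.description = (attributes.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_head_tags(pages: dict[str, str]) -> dict:
    """Given {url: html}, report missing titles plus duplicate titles and descriptions."""
    by_title, by_description = defaultdict(list), defaultdict(list)
    missing_titles = []
    for url, html in pages.items():
        parser = HeadTagParser()
        parser.feed(html)
        title = parser.title.strip()
        if title:
            by_title[title].append(url)
        else:
            missing_titles.append(url)
        if parser.description:
            by_description[parser.description].append(url)
    return {
        "missing_titles": missing_titles,
        "duplicate_titles": {t: urls for t, urls in by_title.items() if len(urls) > 1},
        "duplicate_descriptions": {d: urls for d, urls in by_description.items() if len(urls) > 1},
    }
```

Large groups sharing one title usually point to a template problem rather than individual pages.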

Site Indexation

Site indexation, the number of pages that receive at least one visit from a search engine in a given period, is a powerful metric for quickly assessing how many pages on your site are generating traffic.

Aside from its obvious merit as an SEO KPI to track over time, the metric can also reveal unintended indexing issues, such as leaked tracking or exit URLs on affiliate sites, or large amounts of indexed duplicate content. If the number of pages Google claims to have indexed on your site is vastly different from the site indexation figure in your analytics, you may have found a new problem to solve.
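As a rough illustration of the metric, this Python sketch counts landing pages with at least one organic visit from an analytics export, then compares that figure with an indexed-page count. The column names `landing_page`, `medium` and `visits`, and the `organic` medium label, are assumptions about your export format; adjust them to match your analytics package. The function names are hypothetical.

```python
import csv
import io
from collections import Counter
from typing import Iterable, Mapping


def site_indexation(rows: Iterable[Mapping[str, str]], min_visits: int = 1) -> int:
    """Count distinct landing pages with at least `min_visits` organic search visits."""
    visits = Counter()
    for row in rows:
        if row["medium"] == "organic":
            # A page can appear on several rows (one per search engine), so sum first
            visits[row["landing_page"]] += int(row["visits"])
    return sum(1 for total in visits.values() if total >= min_visits)


def indexation_ratio(indexed_pages: int, visited_pages: int) -> float:
    """Share of indexed pages that actually received organic visits (0.0 if none indexed)."""
    return visited_pages / indexed_pages if indexed_pages else 0.0


if __name__ == "__main__":
    # Hypothetical export: two organic rows for /a, one paid row, one zero-visit row
    sample = "landing_page,medium,visits\n/a,organic,3\n/a,organic,2\n/b,cpc,10\n/c,organic,0\n/d,organic,1\n"
    pages = site_indexation(csv.DictReader(io.StringIO(sample)))
    print(pages, indexation_ratio(indexed_pages=40, visited_pages=pages))
```

A very low ratio is the kind of gap the paragraph above suggests investigating.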

Indexed Development / Staging Servers

Is your staging or development server accessible from outside your office IP range? It’s worth checking that none of your development pages have been indexed or cached by the major search engines. There’s nothing worse than discovering a development server URL ranking in search results (it does happen!) with dummy products and prices in the database. Any customer who lands there is going to have a bad time! If you discover an issue, talk to your development team about restricting access to the staging site by IP address, or consider redirecting search engine bots to the correct version of your site.
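If your stack happens to be Python, IP restriction could look something like the WSGI middleware below. It's a sketch under several assumptions: `StagingIPRestriction` is a hypothetical name, `203.0.113.0/24` is a documentation-only address range standing in for your office network, and `REMOTE_ADDR` is only the real client address if the app isn't behind a proxy or load balancer.

```python
import ipaddress
from typing import Callable, Iterable


class StagingIPRestriction:
    """WSGI middleware that only lets requests from allowed networks reach the app."""

    def __init__(self, app: Callable, allowed_networks: Iterable[str]) -> None:
        self.app = app
        self.allowed = [ipaddress.ip_network(net) for net in allowed_networks]

    def __call__(self, environ, start_response):
        try:
            client = ipaddress.ip_address(environ.get("REMOTE_ADDR", ""))
        except ValueError:
            client = None  # missing or malformed address: treat as outside
        if client is not None and any(client in net for net in self.allowed):
            return self.app(environ, start_response)
        # Everyone else, including crawlers, gets a 403 plus a noindex hint
        start_response("403 Forbidden", [("Content-Type", "text/plain"), ("X-Robots-Tag", "noindex")])
        return [b"Forbidden"]
```

Usage would be along the lines of `application = StagingIPRestriction(application, ["203.0.113.0/24"])` in the staging deployment only.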

Significant / Recent Changes to Server Performance

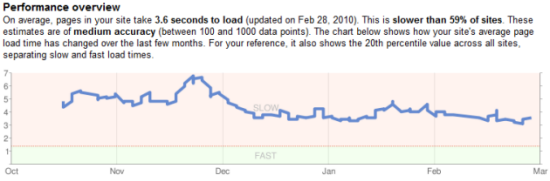

Google have put a lot of effort into helping webmasters identify site speed issues and it could make a lot of sense to keep a regular check on your performance if you’re not doing so already. There are a few useful tools out there to help you speed up your site, starting with Google’s “Site performance” reported located under “Labs” in Webmaster tools:

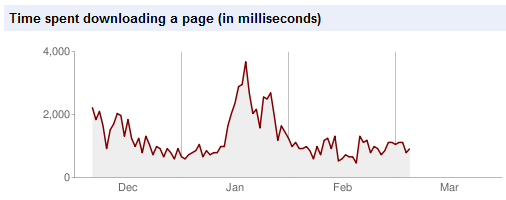

It’s good to check out the “Time spent downloading a page (in milliseconds)” report found under “Diagnostics > Crawl stats” in Webmaster tools, too:
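Alongside Google’s reports, you can keep your own record of download times and flag sudden changes. This Python sketch times full page downloads from wherever the script runs (so the figures won’t match Googlebot’s) and compares medians; the function names are hypothetical and the 1.5 times threshold is an arbitrary assumption to tune for your site.

```python
import statistics
import time
import urllib.request


def download_time_ms(url: str, timeout: float = 30.0) -> float:
    """Time one full GET of `url` (headers plus body) in milliseconds."""
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as response:
        response.read()
    return (time.perf_counter() - start) * 1000.0


def significant_slowdown(baseline_ms: list[float], recent_ms: list[float], ratio: float = 1.5) -> bool:
    """Flag a slowdown when the recent median exceeds `ratio` times the baseline median."""
    return statistics.median(recent_ms) > ratio * statistics.median(baseline_ms)
```

Collecting a week of `download_time_ms` samples as a baseline and running `significant_slowdown` against each new batch gives an early warning before the change shows up in Webmaster Tools. Medians are used so a single slow request doesn’t trigger an alert.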

Tackling search engine accessibility issues like errors and canonicalisation problems is a really important part of your SEO routine. It’s also a favourite subject of mine! What checks do you carry out regularly to manage the performance of your website? Do you have your own routine? If you manage a large site, or many large sites, which “industrial strength” tools or automated processes give you the most insight?

This is a post by Richard Baxter, Founder and SEO Consultant at SEOgadget.co.uk, a performance SEO agency specialising in helping people and organisations succeed in search. Follow him on Twitter and Google Buzz.
